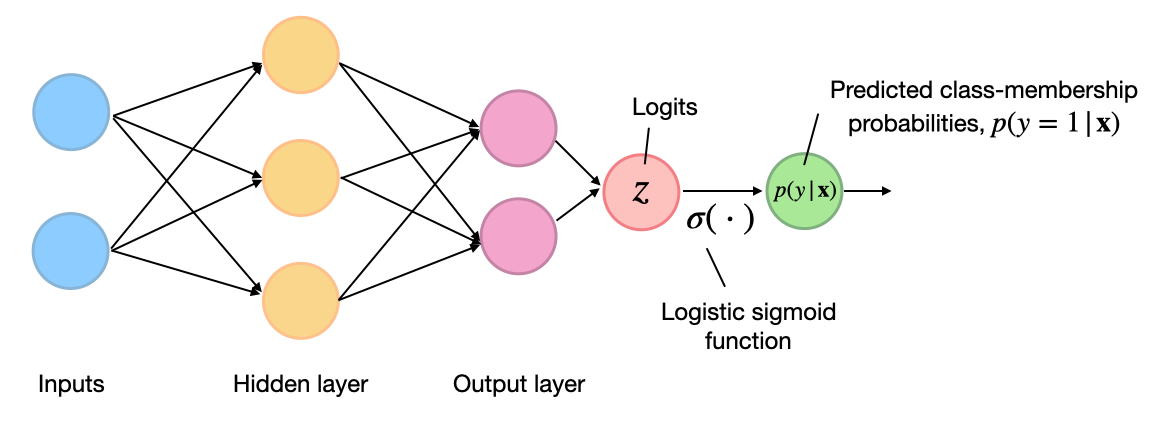

Connections: Log Likelihood, Cross Entropy, KL Divergence, Logistic Regression, and Neural Networks – Glass Box

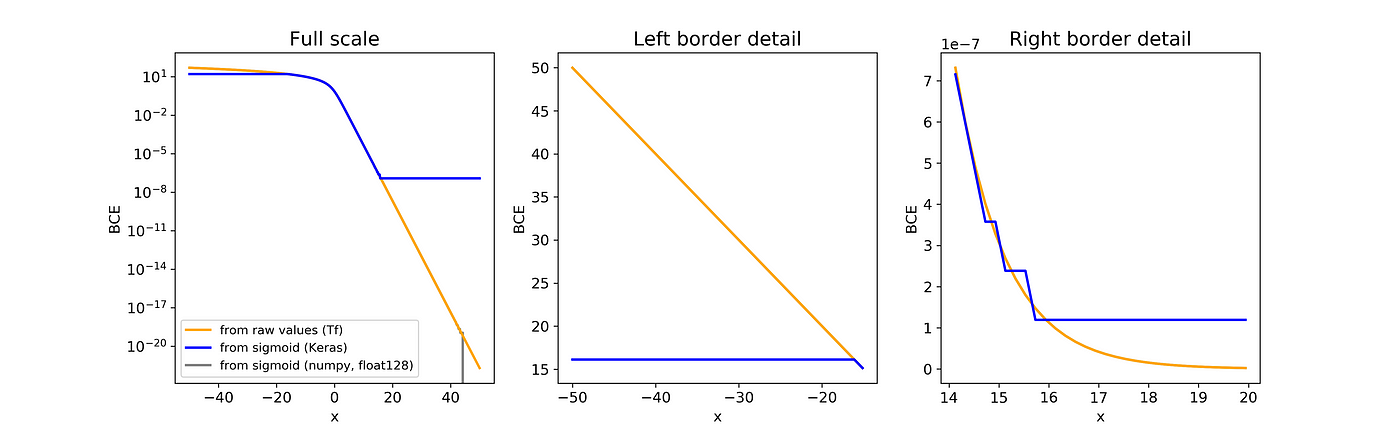

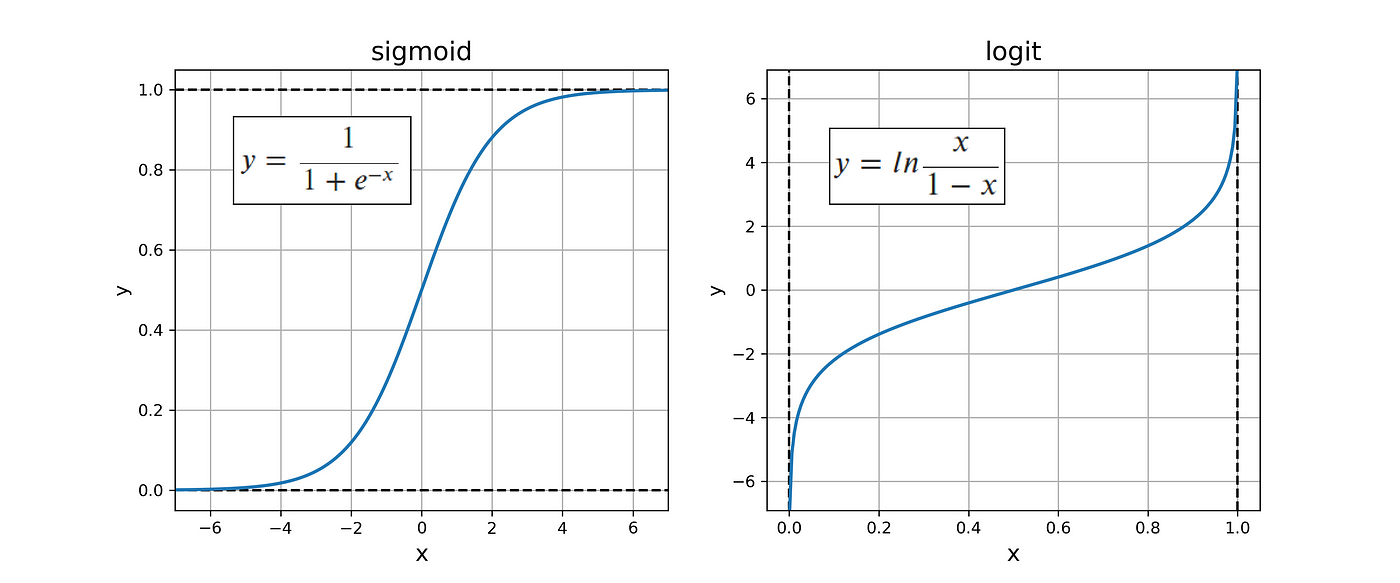

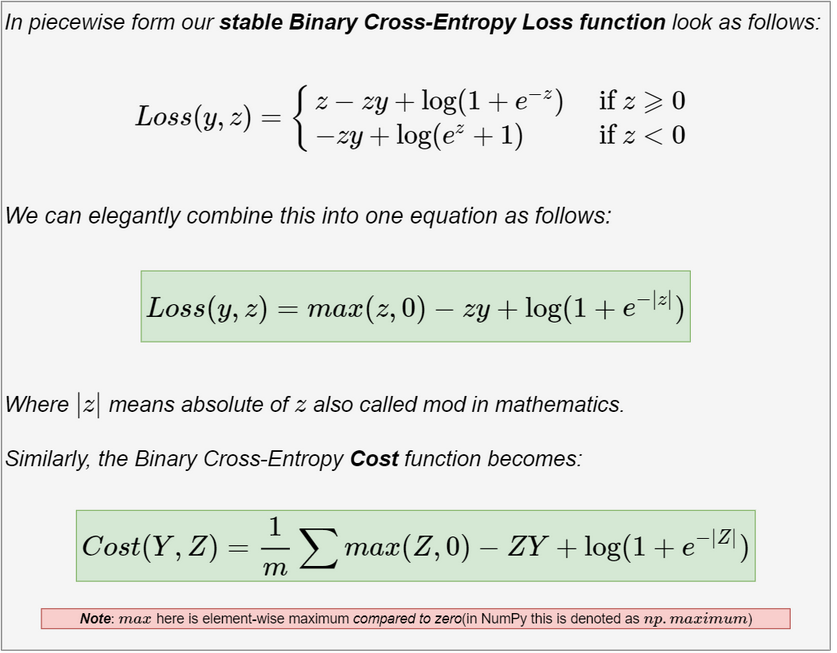

Sigmoid Activation and Binary Crossentropy - A Less Than Perfect Match? | by Harald Hentschke | Towards Data Science

How do Tensorflow and Keras implement Binary Classification and the Binary Cross-Entropy function? | by Rafay Khan | Medium

backpropagation - How is division by zero avoided when implementing back-propagation for a neural network with sigmoid at the output neuron? - Artificial Intelligence Stack Exchange

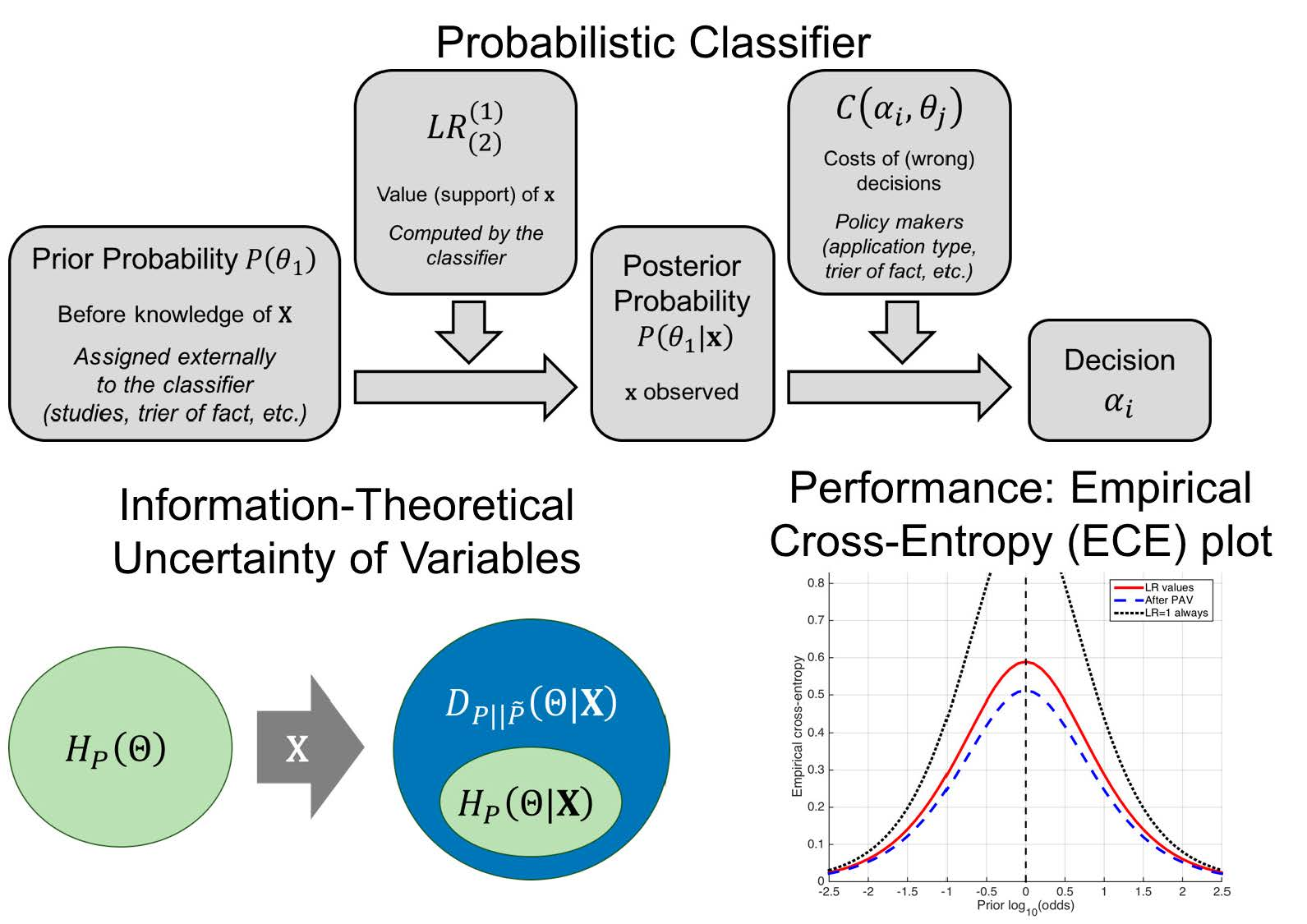

Connections: Log Likelihood, Cross Entropy, KL Divergence, Logistic Regression, and Neural Networks – Glass Box

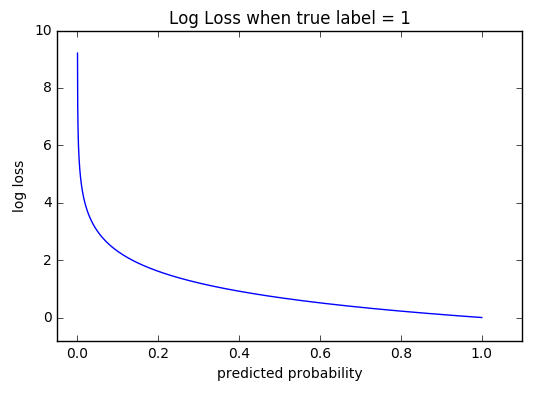

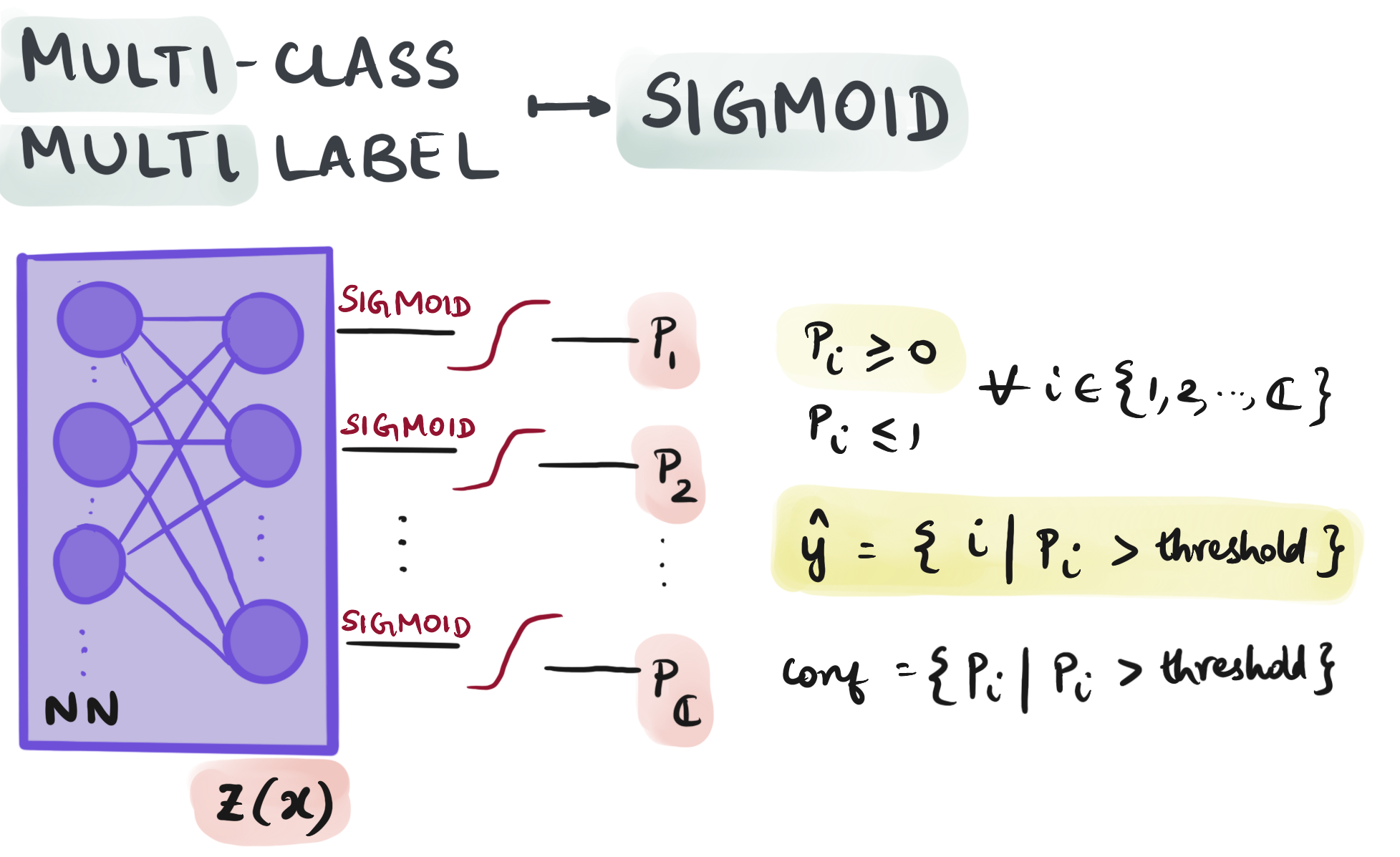

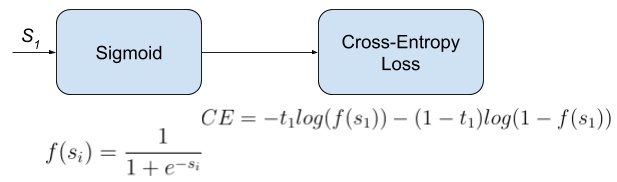

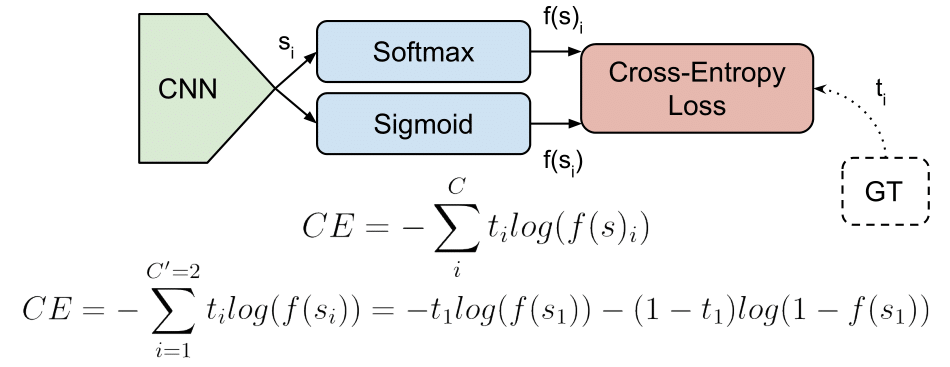

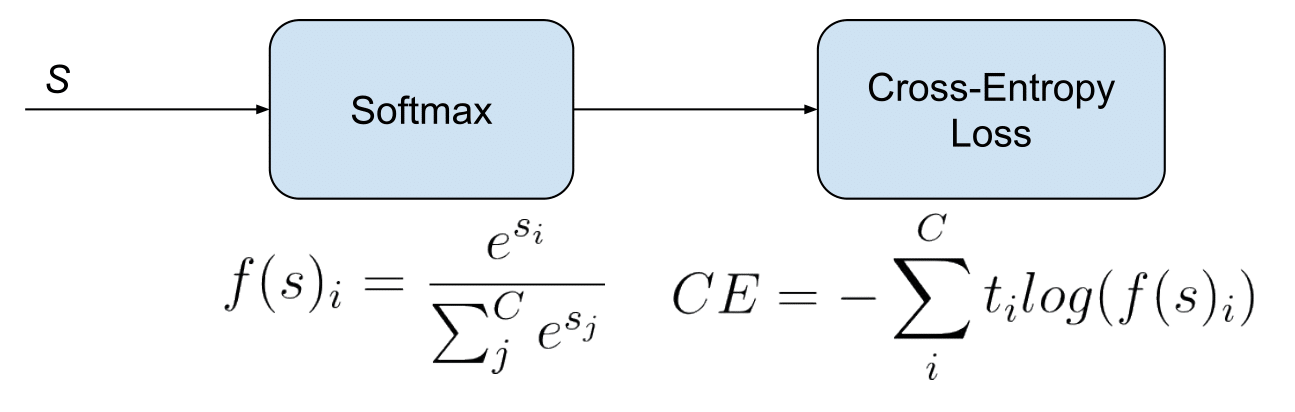

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names

The learning curves for the sigmoid cross entropy loss and the graph... | Download Scientific Diagram

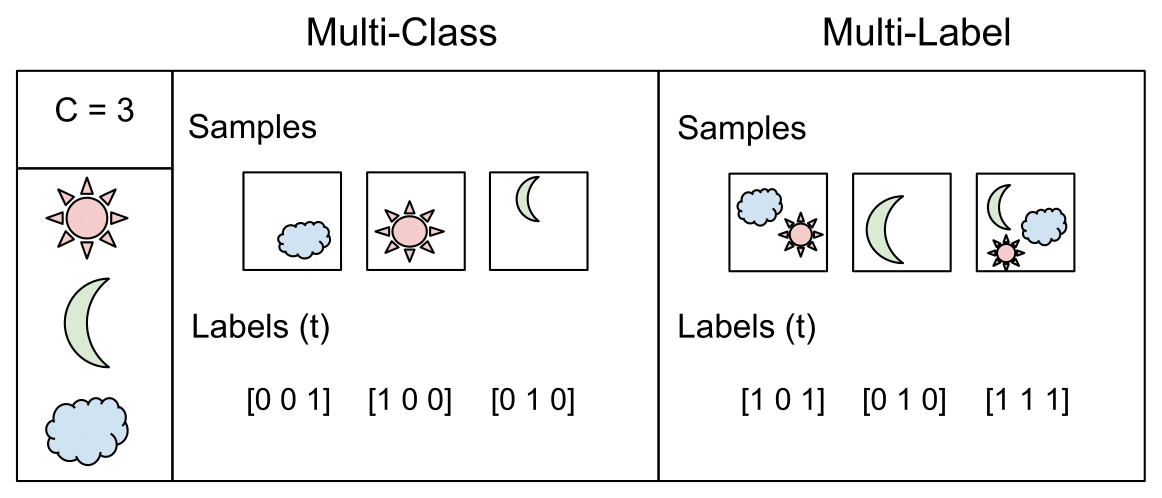

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names
